Digibrain version 2

Introduction

In January 2002, I finished the code for an assembly language implementation of a neural network. For those who don't know, neural networks are one way to create artificial intelligence. Inspired by the brain, a network of interconnected neurons is set up, with a set of inputs and outputs. The network is then trained with a training set, and learns which outputs correspond to which inputs. The trained network can then be stored and reused at any time for making decisions. Neural networks are particularly good at pattern recognition, as you will see in the example. I've worked out all the dirty maths and put everything into an easy-to-use class, which you can use for any AI you need. This object provides a general-purpose feed-forward neural network using back propagation. The number of inputs, outputs, hidden layers and neurons can be configured easily and all the calculations are done inside. The class has very simple methods like 'train(inputs, desired outputs)' and 'run(inputs)', as well as save & load functions for storing the learned data in a file or memory.

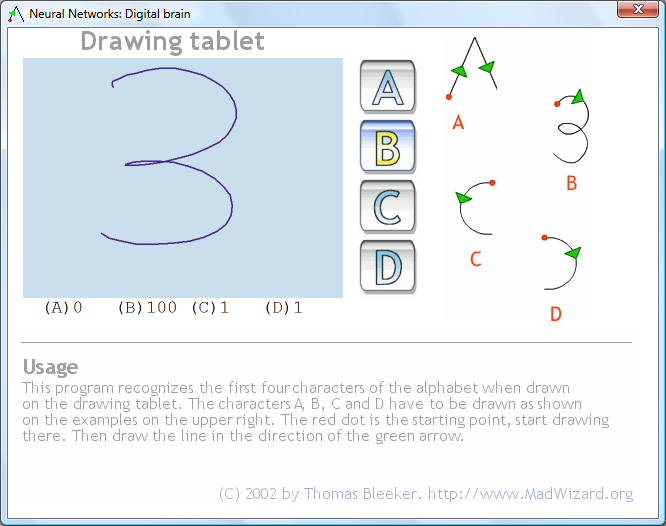

As an example I wrote a program that recognizes characters drawn with the mouse on a small tablet. It can only recognize the first four characters of the alphabet, drawn the way most handhelds expect them. It's a relatively simple network (20 neurons, 108 connections) but I was surprised at how well it works. Just draw an A, B, C or D on the drawing tablet; the program speaks for itself. You can download digibrain.zip and try it out yourself.

Version 2

I continued developing this program to recognize all 26 characters in the alphabet and to give the user the option to train the network with custom data.

About the network

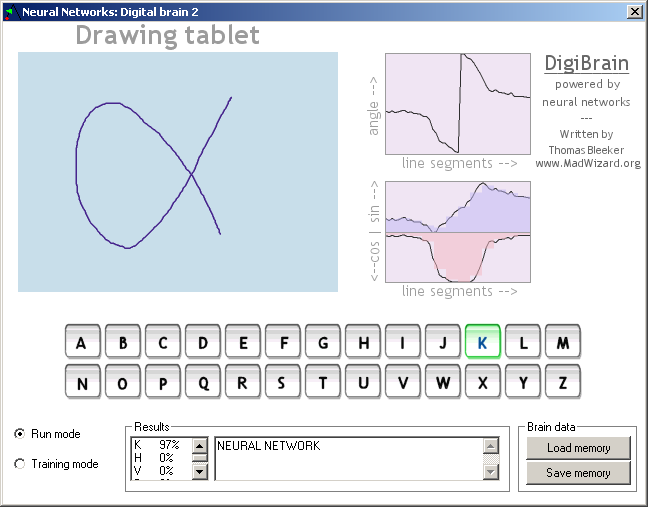

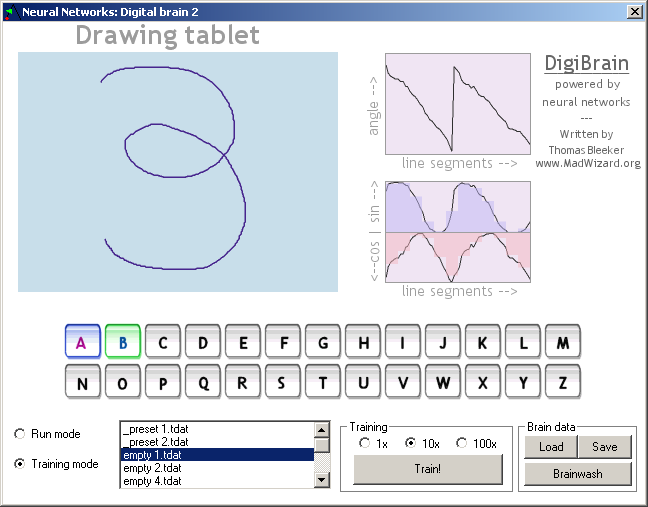

The network used is a feed-forward neural network, using back propagation. When you draw a character, a sample is taken every 10 pixels of line length. Then the angle between the current and the previous sample is calculated. This angle is shown in the upper graph as you draw the character.
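The sampling step can be sketched in Python. This is only an illustration, not the MASM code from digibrain: the function names are made up, and it assumes that "the angle between the current and the previous sample" means the direction of the line from the previous sample to the current one.

```python
import math

def sample_stroke(points, step=10.0):
    """Resample a mouse stroke so samples lie `step` pixels apart along the line."""
    if not points:
        return []
    samples = [points[0]]
    prev = points[0]
    travelled = 0.0  # line length covered since the last sample
    for cur in points[1:]:
        seg = math.hypot(cur[0] - prev[0], cur[1] - prev[1])
        while seg > 0 and travelled + seg >= step:
            # Move along the current segment to the next sample position.
            t = (step - travelled) / seg
            prev = (prev[0] + t * (cur[0] - prev[0]),
                    prev[1] + t * (cur[1] - prev[1]))
            samples.append(prev)
            seg = math.hypot(cur[0] - prev[0], cur[1] - prev[1])
            travelled = 0.0
        travelled += seg
        prev = cur
    return samples

def stroke_angles(samples):
    """Direction (in radians) from each sample to the next one."""
    return [math.atan2(b[1] - a[1], b[0] - a[0])
            for a, b in zip(samples, samples[1:])]

# An L-shaped stroke: 35 pixels to the right, then 32 pixels down the screen.
samples = sample_stroke([(0, 0), (35, 0), (35, 32)])
angles = stroke_angles(samples)
```

Screen coordinates grow downwards, so an angle of pi/2 here means "drawing towards the bottom of the tablet".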

The second step is to calculate the sine and cosine of each sampled angle. The result is shown by the black lines in the second graph on the main window. From this data, 12 averages are calculated for each of the two graphs (sine and cosine). These are shown by the blended blocks drawn over the graph when you finish drawing.
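A rough Python sketch of this second step follows. The real program uses the NonUniformAverages function mentioned in the credits, whose exact behaviour is not described here, so the bucketing below is an assumed stand-in. The function names and the ordering of the result (sines first, then cosines) are also assumptions.

```python
import math

def bucket_averages(values, buckets=12):
    """Average `values` into a fixed number of buckets, whatever its length."""
    n = len(values)
    if n == 0:
        return [0.0] * buckets
    averages = []
    for b in range(buckets):
        lo = b * n // buckets
        hi = max((b + 1) * n // buckets, lo + 1)  # always at least one value
        chunk = values[lo:hi]
        averages.append(sum(chunk) / len(chunk))
    return averages

def network_inputs(angles, buckets=12):
    """Turn a list of stroke angles into 2 * buckets averaged sine/cosine values."""
    sines = [math.sin(a) for a in angles]
    cosines = [math.cos(a) for a in angles]
    return bucket_averages(sines, buckets) + bucket_averages(cosines, buckets)

# A straight stroke to the right: every angle is 0.
inputs = network_inputs([0.0] * 30)
```

Because the number of averages is fixed, a long stroke and a short stroke both end up as the same number of values, which fits the note that the size of the drawing does not affect recognition.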

Finally, these 24 input values (12 sines, 12 cosines) are fed to the network. Besides the 24 inputs, the network has one hidden layer of 15 neurons, and one output layer of 26 neurons. Ideally, only one output should be 1, indicating which character was drawn, and all the others should be zero. Of course this level of accuracy is almost never achieved, but the outputs come close enough to give decent recognition.
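To make the 24-15-26 layout concrete, here is a small Python sketch of a feed-forward network trained with back propagation. It is not a translation of the assembly class: the class name, the sigmoid activation, the learning rate and the initial weight range are all assumptions chosen for illustration. Only the layer sizes and the train/run method names come from the description above.

```python
import math
import random

def sigmoid(x):
    # Clamp to keep math.exp from overflowing on extreme sums.
    x = max(-60.0, min(60.0, x))
    return 1.0 / (1.0 + math.exp(-x))

class FeedForwardNet:
    """Hypothetical stand-in for the digibrain network object."""

    def __init__(self, sizes=(24, 15, 26), rate=0.5, seed=None):
        rng = random.Random(seed)
        self.rate = rate  # assumed learning rate
        # weights[l][j] holds the weights into neuron j of layer l + 1;
        # the last weight of each neuron is its bias.
        self.weights = [
            [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)]
             for _ in range(n_out)]
            for n_in, n_out in zip(sizes, sizes[1:])
        ]

    def _forward(self, inputs):
        activations = [list(inputs)]
        for layer in self.weights:
            prev = activations[-1] + [1.0]  # constant 1.0 feeds the bias
            activations.append(
                [sigmoid(sum(w * x for w, x in zip(neuron, prev)))
                 for neuron in layer])
        return activations

    def run(self, inputs):
        """Return the output layer's activations for one input vector."""
        return self._forward(inputs)[-1]

    def train(self, inputs, desired):
        """One back propagation step towards the desired outputs."""
        acts = self._forward(inputs)
        deltas = [(d - o) * o * (1.0 - o) for o, d in zip(acts[-1], desired)]
        for l in range(len(self.weights) - 1, -1, -1):
            layer = self.weights[l]
            prev = acts[l] + [1.0]
            below = []
            if l > 0:
                # Error for the layer below, using the weights before the update.
                below = [
                    sum(layer[j][i] * deltas[j] for j in range(len(layer)))
                    * acts[l][i] * (1.0 - acts[l][i])
                    for i in range(len(acts[l]))
                ]
            for j, neuron in enumerate(layer):
                for i in range(len(neuron)):
                    neuron[i] += self.rate * deltas[j] * prev[i]
            deltas = below

net = FeedForwardNet(seed=1)
outputs = net.run([0.0] * 24)  # 26 values between 0 and 1
```

The desired outputs for a character would be a list of 26 values with a 1 at that character's position and 0 everywhere else.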

Quick start

Start digibrain.exe, click the load memory button on the bottom right, locate characters.brain in the same directory as digibrain and open it. The digital brain has now loaded pre-trained data and can recognize the characters you draw on the drawing tablet (top left).

Notes:

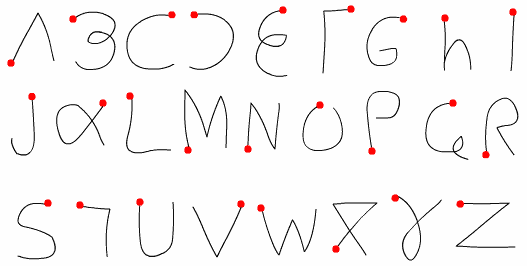

- Each character needs to be drawn as one continuous line exactly as trained (see image below)

- The drawing direction is important: drawing a V from left to right is very different from drawing it right to left.

- The size of the drawing does not affect recognition, but don't draw the characters too small as the program will not be able to get enough good samples then.

How to draw the characters

(assuming you've loaded characters.brain)

In the image above, the complete alphabet is drawn the way the network stored in characters.brain was trained. The red dot indicates the starting point of each character.

Results

As you draw the characters, two controls at the bottom of the window show the results. The list box (left) lists the 10 characters that look most like the character you've drawn, with the best match on top. The edit field shows all characters recognized so far, so you can 'draw' a word that way. In the complete alphabet shown, the best match is highlighted in green.
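One plausible way to build such a list is to sort the 26 network outputs from high to low and keep the first 10. The sketch below assumes that is how the ranking works and that output number 0 stands for A, 1 for B, and so on. The function name is made up.

```python
import string

def best_matches(outputs, labels=string.ascii_uppercase, count=10):
    """Return the `count` (label, output) pairs with the highest outputs."""
    ranked = sorted(zip(labels, outputs), key=lambda pair: pair[1], reverse=True)
    return ranked[:count]

# Example outputs: the network is fairly sure about C, with A as runner-up.
outputs = [0.0] * 26
outputs[2] = 0.9
outputs[0] = 0.4
matches = best_matches(outputs)
```

The first entry of the result is the best match, which is the character that would be appended to the edit field.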

(Pssst....wanna know a secret? Above the blue drawing tablet, where the text 'drawing tablet' is, are two hidden click 'zones'. When you click to the left of that text, the last character in the result edit field is deleted (effectively a backspace). Similarly, clicking to the right of the text adds a space to the edit field. Using both you can draw a complete sentence and undo mistakes without using the keyboard :)

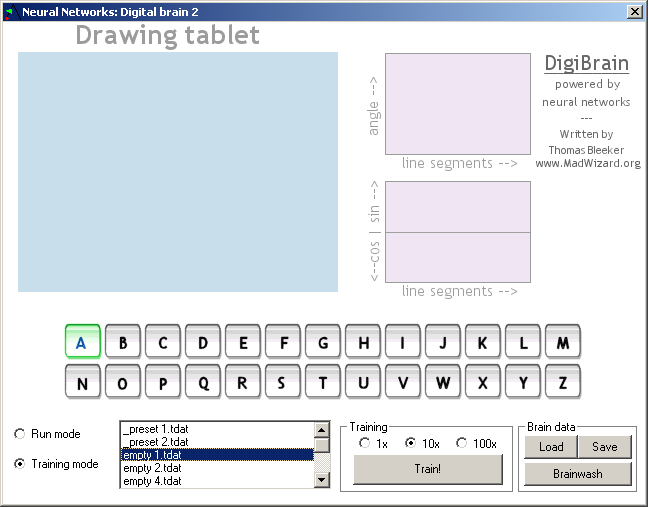

Training the network yourself

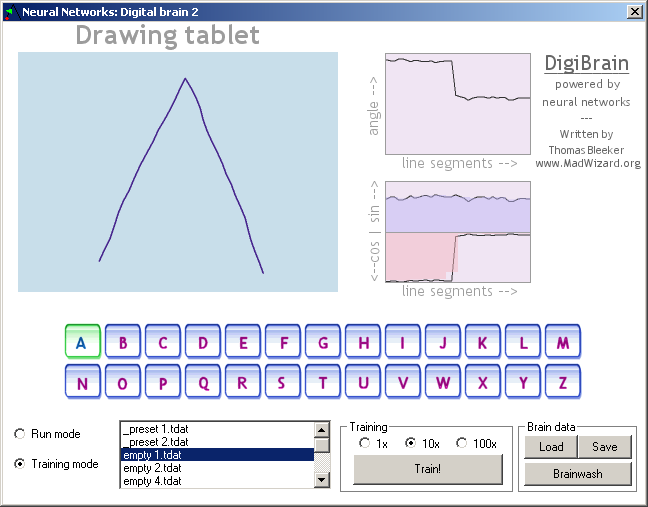

To train the network, select the training mode radio button. This will show some controls as in the image below. Training is done by creating a training set (a set of drawings for the complete alphabet) and training the neural network with it.

The items are:

- Alphabet

- Just like in run mode, the complete alphabet is shown. However, in training mode you can select a character and see the stored drawing for it if it's available. The colors of the characters have special meanings; gray means that in the current training set, no drawing for that character is available (yet); blue means such a drawing is available; green is the active (selected) character.

- List with training files

- Training sets are stored as .tdat files in the train_sets subdirectory of digibrain. This list box lists all files in that directory. Currently digibrain doesn't have an option to create more of these files but you can simply copy existing files to new ones and modify them. The _preset x.tdat files are the ones I used for training the network as stored in characters.brain. The _numbers ;-).tdat file is another example with the numbers 0-9 set as characters. The others (empty x.tdat) are for your own experiments.

You can choose a training set by simply selecting one.

- Training options

- In the training frame a big Train! button is present. When you click this button, the currently active training set is used to train the network. Note that you can use multiple trainings on the same network (this even produces better results). The training just adjusts the network with its data, but doesn't completely overwrite it.

The 1x, 10x and 100x radio buttons can be used to set the number of times the training set is presented to the neural network.

- Brain data

- The brain data frame shows three buttons: load, save and brainwash. The first two buttons load or save the currently trained neural network from or to a file.

The brainwash button initializes the network with random data, cleaning the brain from any previous training. Always use this button if you want to start training on an 'empty' brain. This is also done when you've just started digibrain.exe.

To make a training set, click on one of the empty x.tdat files in the training set file list. Then click on a character in the alphabet and draw it on the tablet (each drawing overwrites the previous one). The training file is saved as you modify it, so you don't have to save it yourself.

Once you've created a drawing for all characters, use the Train! button to train the network with your custom training set. If you want to start with a clean brain, push the brainwash button first. You can use multiple trainings and training sets; each training slightly adjusts the neural network.

If you want to store your complete trained network, use the Save button in the brain data frame on the bottom right.

(training set with two characters set)

(training set with all characters set)

Training tips

- Make the characters as different as possible, so they are better distinguishable for the network.

- Use multiple training sets and train with them in turns to get some variation in the training data.

- Play a bit with the amount of training. Too much training or not enough training both give worse results.
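Training with several sets "in turns" can be pictured as the loop below. This is a sketch under assumptions, not how digibrain does it internally: `train` is any function taking (inputs, desired outputs), each training set is a list of such pairs, and `rounds` plays the role of the 1x, 10x and 100x setting.

```python
def train_in_turns(train, training_sets, rounds=10):
    """Present every training set once per round, cycling through the sets."""
    presented = 0
    for _ in range(rounds):
        for training_set in training_sets:
            for inputs, desired in training_set:
                train(inputs, desired)
                presented += 1
    return presented

# Demonstration with a dummy train function that only records what it was given.
seen = []
set_one = [([0.1], [1.0]), ([0.2], [0.0])]
set_two = [([0.3], [1.0])]
count = train_in_turns(lambda i, d: seen.append((i, d)), [set_one, set_two], rounds=2)
```

Raising `rounds` is the knob to play with: too few rounds leave the network undertrained, while too many can make it fit the training drawings so closely that slightly different drawings are recognized worse.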

Source code

The source code is written for MASM32 version 7. A custom version of my own PNG library and the OOP macros are included as well. Be sure to set the right paths in make.bat to be able to assemble the program.

Credits

I wrote most of the program myself, including the complete neural network object, the digibrain program and the PNG library. The assembly OOP includes were developed by Jaymeson Trudgen (NaN) and me. The NonUniformAverages function was written by bitRAKE. Finally, a site that helped me a lot with the neural network was generation5.org. J. Matthews's essay on neural networks is excellent!

Two discussions on the win32asm community message board about the first and second version of digibrain can be found here and here.

Downloads

Download digibrain.zip (version 1)

Download digibrain2.zip (version 2, read the documentation first!)

Download nnet.zip (win32asm neural network library)